
Running DeepSeek Locally

DeepSeek is a hot potato. While we've been shocked by DeepSeek's capabilities and cost efficiency, many of us are worried about data privacy and security.

Running a model locally is not always the answer, but it can relieve you of many of these security and privacy concerns.

Ollama

Ollama is an open-source framework designed to make it easy to deploy large language models (LLMs) in a local environment.

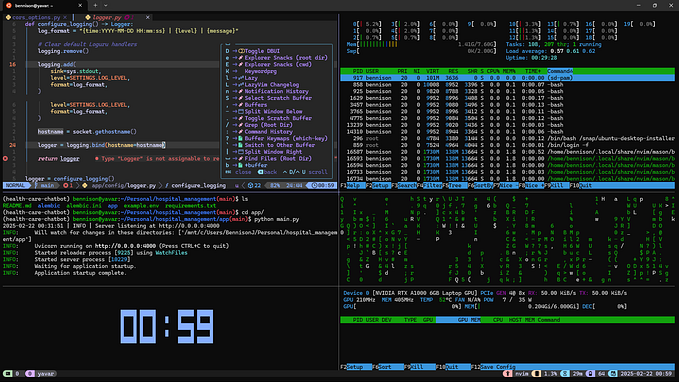
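Once Ollama is installed and running, it serves a local HTTP API. As a minimal sketch, assuming the server listens on its default address `http://localhost:11434`, the Python snippet below asks it which models have been downloaded to this machine through the `GET /api/tags` endpoint. The helper names `parse_model_names` and `list_local_models` are hypothetical and not part of Ollama itself.

```python
import json
import urllib.request

# Assumption: Ollama is running locally on its default port (11434).
OLLAMA_URL = "http://localhost:11434"


def parse_model_names(payload: str) -> list[str]:
    """Extract the model names from the JSON body returned by GET /api/tags."""
    data = json.loads(payload)
    return [model["name"] for model in data.get("models", [])]


def list_local_models(base_url: str = OLLAMA_URL) -> list[str]:
    """Ask the local Ollama server which models are available on this machine."""
    with urllib.request.urlopen(f"{base_url}/api/tags", timeout=5) as resp:
        return parse_model_names(resp.read().decode("utf-8"))
```

Calling `list_local_models()` returns a list of model tags; if the server is not running, `urlopen` raises a `URLError` (a subclass of `OSError`), which is a quick way to confirm the installation before going further.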

Set up (Linux)

Local deployment: Unlike cloud-based AI services, Ollama lets you run LLMs directly on your local machine. Once a model has been downloaded, you can use it without relying on internet connectivity or cloud services.
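To illustrate that a prompt never has to leave your machine, here is a sketch of a non-streaming request to Ollama's local `POST /api/generate` endpoint. It assumes the default port 11434 and that a DeepSeek model has already been pulled with `ollama pull`; the tag `deepseek-r1:7b` is an assumption, so substitute whichever model you downloaded. The function names are illustrative only.

```python
import json
import urllib.request

# Assumptions: default local Ollama address, and a DeepSeek model tag
# (deepseek-r1:7b) that has already been downloaded with `ollama pull`.
OLLAMA_URL = "http://localhost:11434"
MODEL = "deepseek-r1:7b"


def build_generate_request(prompt: str, model: str = MODEL,
                           base_url: str = OLLAMA_URL) -> urllib.request.Request:
    """Build a non-streaming POST /api/generate request for the local server."""
    body = json.dumps({"model": model, "prompt": prompt, "stream": False})
    return urllib.request.Request(
        f"{base_url}/api/generate",
        data=body.encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def generate(prompt: str) -> str:
    """Send the prompt to the model on localhost and return its full answer."""
    req = build_generate_request(prompt)
    # Local generation can be slow (especially on CPU), so allow a long timeout.
    with urllib.request.urlopen(req, timeout=300) as resp:
        return json.loads(resp.read().decode("utf-8"))["response"]
```

With the server running, `print(generate("Summarize the benefits of local inference."))` prints the model's answer. Setting `"stream": False` makes the server return a single JSON object instead of a sequence of partial chunks, which keeps the parsing simple.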

Supported platforms: Linux, Windows, macOS.

Hardware requirements: A GPU is recommended for running Ollama efficiently, but it can also run on a CPU alone (expect it to be much slower). Because the model is loaded into memory, your server (PC) needs enough RAM to hold it: at a minimum, the available RAM should cover the size of the model (e.g., a 43 GB model needs roughly 43 GB of RAM).
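The RAM requirement can be estimated with simple arithmetic: the weights take roughly (number of parameters × bits per weight ÷ 8) bytes. The sketch below applies that rule of thumb and checks whether a given amount of RAM covers a model. The 20% headroom for the context cache and runtime overhead is an assumed, hypothetical value rather than an Ollama figure; real usage varies with context length and quantization format.

```python
# Rule of thumb: weight memory ≈ parameters × bits per weight ÷ 8 bytes.
# Assumption: a flat 20% headroom approximates context cache and runtime
# overhead; this is a hypothetical value, not an Ollama specification.
DEFAULT_HEADROOM = 1.2


def estimate_model_gb(params_billion: float, bits_per_weight: float) -> float:
    """Approximate weight size in GB (1 GB = 1e9 bytes) from parameter count."""
    # params_billion * 1e9 params * bits / 8 bits-per-byte / 1e9 bytes-per-GB
    return params_billion * bits_per_weight / 8


def fits_in_ram(model_gb: float, available_ram_gb: float,
                headroom: float = DEFAULT_HEADROOM) -> bool:
    """True if the available RAM covers the model size plus the assumed headroom."""
    return available_ram_gb >= model_gb * headroom
```

For instance, a 7B-parameter model quantized to 4 bits comes out at about 3.5 GB, and `fits_in_ram(43, 64)` returns `True` for a 43 GB model on a 64 GB machine, while `fits_in_ram(43, 43)` returns `False` once the headroom is taken into account.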